(a) BioShock, complex, entropy 2,114 bytes

(b) BioShock, normal, entropy 1,645 bytes

(c) BioShock, simple, entropy 401 bytes

(d) Rome, complex, entropy 2,877 bytes

(e) Rome, normal, entropy 2,760 bytes

(f) Rome, simple, entropy 2,322 bytes

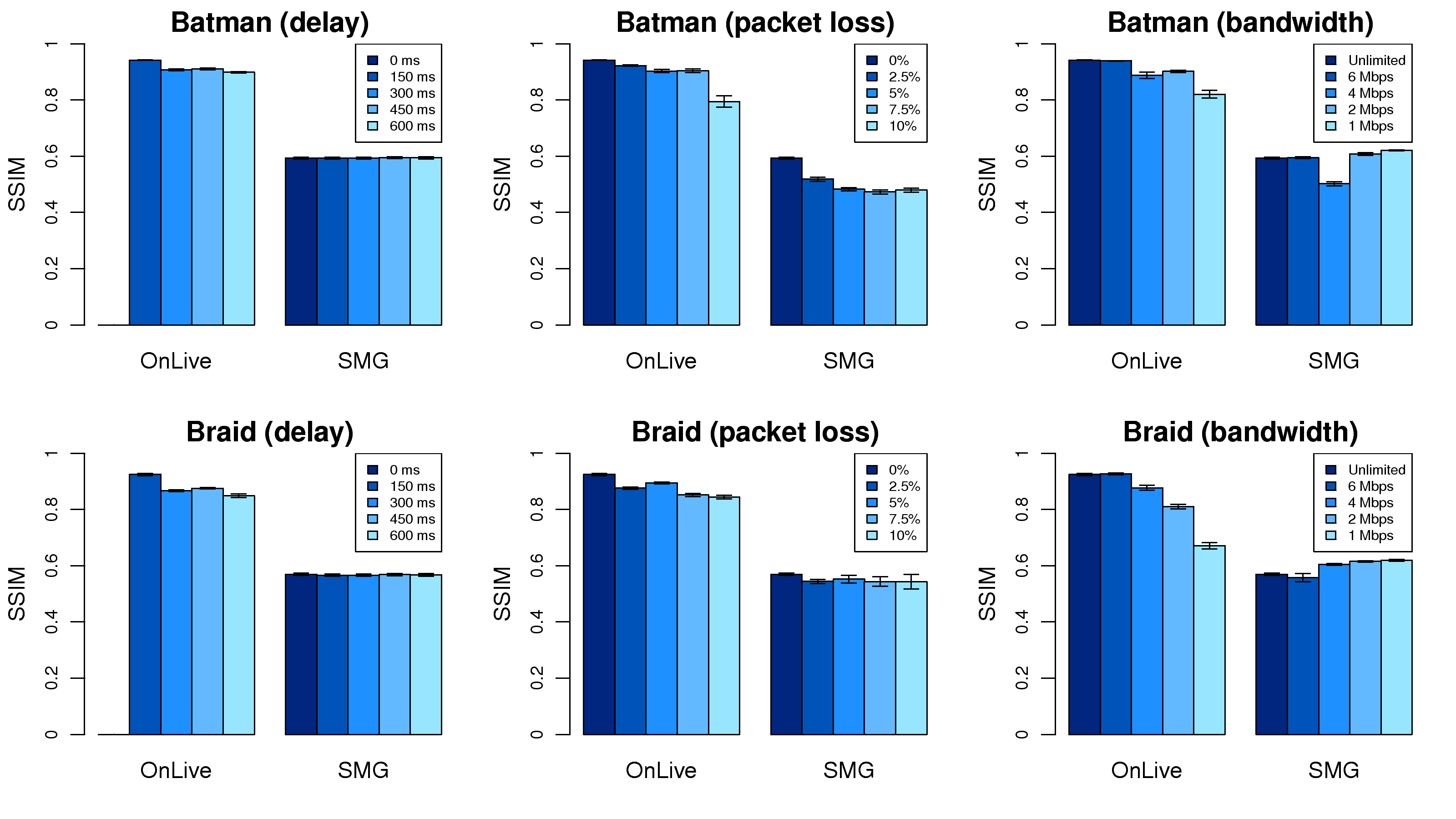

(a) OnLive, no degradation, SSIM 0.94

(b) OnLive, bandwidth 4 Mbps, SSIM 0.89

(c) OnLive, packet loss rate 10%, SSIM 0.79

(d) SMG, no degradation, SSIM 0.59

(e) SMG, bandwidth 4 Mbps, SSIM 0.50

(f) SMG, packet loss rate 10%, SSIM 0.48