
Brochure 2020

In the second year of the project (2019), we focused on using computer vision technology to detect vehicles, compute the length of car queues, and estimate car speed as well as other parameters. We decided to design a new machine-learning model to meet the requirements of real-time edge computing. My research team then proposed a new deep learning-based neural network model that we named Cross Stage Partial Network (CSPNet). The main concept underlying CSPNet is optimization of the transmission path strategy of gradient flow for the backpropagation process of a Convolutional Neural Network (CNN) architecture. We endeavored to maximize the difference in weight features that can be obtained for each layer, permitting the CNN to maintain a high level of learning ability. This system can be applied to all current mainstream CNN architectures, including ResNet, ResNeXt, and DenseNet, amongst others, and it maintains or improves the accuracy of image classification while reducing the computation of these CNNs by 10-30%. Since we designed CSPNet with a view to computing costs, load balancing, and memory bandwidth (reduced by 40-80%), it is more suitable for edge computing platforms with limited resources.

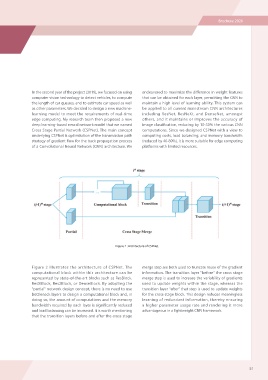

Figure 2: Architecture of CSPNet.

Figure 2 illustrates the architecture of CSPNet. The computational block within this architecture can be represented by state-of-the-art blocks such as ResBlock, ResXBlock, Res2Block, or DenseBlock. By adopting the "partial" network design concept, there is no need to use bottleneck layers to design a computational block and, in doing so, the amount of computation and the memory bandwidth required by each layer are significantly reduced and load balancing can be improved. It is worth mentioning that the transition layers before and after the cross stage merge step are both used to truncate reuse of the gradient information. The transition layer "before" the cross stage merge step is used to increase the variability of gradients used to update weights within the stage, whereas the transition layer "after" that step is used to update weights for the cross-stage block. This design reduces meaningless learning of redundant information, thereby ensuring a higher parameter usage rate and rendering it more advantageous in a lightweight CNN framework.
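The data flow through one stage can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the project's implementation: the function names (`csp_stage`, `residual_block`, `conv1x1`, `conv1x1_macs`) are hypothetical, the transition layers are assumed to be 1x1 convolutions, the computational block is a toy residual stand-in, and the channels are assumed to be split in half.

```python
import random


def conv1x1(channels, weights):
    """1x1 convolution: out[o][p] = sum over i of weights[o][i] * channels[i][p]."""
    n_pix = len(channels[0])
    return [
        [sum(w * ch[p] for w, ch in zip(row, channels)) for p in range(n_pix)]
        for row in weights
    ]


def relu(channels):
    return [[max(0.0, v) for v in ch] for ch in channels]


def random_weights(n_out, n_in, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def residual_block(channels, weights):
    """Toy stand-in for the computational block: x + relu(conv1x1(x))."""
    mixed = relu(conv1x1(channels, weights))
    return [[a + b for a, b in zip(ch, m)] for ch, m in zip(channels, mixed)]


def csp_stage(channels, block_w, before_w, after_w, split=None):
    """One cross stage partial stage on a list of channels (assumed layout)."""
    if split is None:
        split = len(channels) // 2           # assumption: split channels in half
    bypass, main = channels[:split], channels[split:]
    main = residual_block(main, block_w)     # only part of the channels is processed
    main = conv1x1(main, before_w)           # transition layer "before" the merge
    merged = bypass + main                   # cross stage merge: channel concatenation
    return conv1x1(merged, after_w)          # transition layer "after" the merge


def conv1x1_macs(n_in, n_out, n_pix):
    """Multiply-accumulate count of a 1x1 convolution."""
    return n_in * n_out * n_pix


if __name__ == "__main__":
    rng = random.Random(0)
    x = [[float(c + p) for p in range(4)] for c in range(8)]  # 8 channels, 4 pixels
    y = csp_stage(
        x,
        random_weights(4, 4, rng),
        random_weights(4, 4, rng),
        random_weights(8, 8, rng),
    )
    print(len(y), len(y[0]))
    # The toy block's convolution on half the channels vs. on all of them:
    print(conv1x1_macs(4, 4, 4), conv1x1_macs(8, 8, 4))
```

In this toy setting, the convolution inside the block costs 64 multiply-accumulates on the four routed channels, against 256 if it ran on all eight. The two transition layers add cost of their own, so this per-block ratio should not be read as the overall savings quoted in the text.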
