Page 47 - My FlipBook

P. 47

Brochure 2020

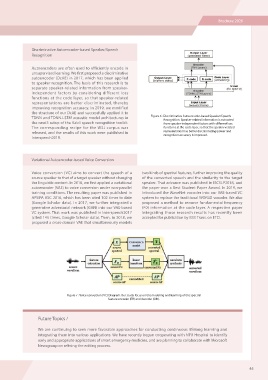

Discriminative Autoencoder-based Speaker/Speech Figure 6 : Discriminative Autoencoder-based Speaker/Speech

Recognition Recognition. Speaker-related information is extracted

from speaker-independent factors with di erent loss

Autoencoders are often used to efficiently encode in functions at the code layer, so that the speaker-related

unsupervised learning. We rst proposed a discriminative representation has better discriminating power and

autoencoder (DcAE) in 2017, which has been applied recognition accuracy is improved.

to speaker recognition. The basis of this research is to

separate speaker-related information from speaker-

independent factors by considering different loss

functions at the code layer, so that speaker-related

representations are better discriminated, thereby

improving recognition accuracy. In 2019, we modified

the structure of our DcAE and successfully applied it to

TDNN and TDNN-LSTM acoustic model architectures in

the nnet3 setup of the Kaldi speech recognition toolkit.

The corresponding recipe for the WSJ corpus was

released, and the results of this work were published in

Interspeech2019.

Variational Autoencoder-based Voice Conversion two kinds of spectral features, further improving the quality

of the converted speech and the similarity to the target

Voice conversion (VC) aims to convert the speech of a speaker. That advance was published in ISCSLP2018, and

source speaker to that of a target speaker without changing the paper won a Best Student Paper Award. In 2019, we

the linguistic content. In 2016, we rst applied a variational introduced the WaveNet vocoder into our VAE-based VC

autoencoder (VAE) to voice conversion under non-parallel system to replace the traditional WORLD vocoder. We also

training conditions. The resulting paper was published in proposed a method to remove fundamental frequency

APSIPA ASC 2016, which has been cited 102 times to date (F0) information at the code layer. A respective paper

(Google Scholar data). In 2017, we further integrated a integrating those research results has recently been

generative adversarial network (GAN) into our VAE-based accepted for publication by IEEE Trans. on ETCI.

VC system. That work was published in Interspeech2017

(cited 145 times, Google Scholar data). Then, in 2018, we

proposed a cross-domain VAE that simultaneously models

Figure 7 : Voice conversion (VC) Diagram. Our study focus on the modeling and learning of the spectral

feature encoder (Eθ) and decoder (GΦ).

Future Topics /

We are continuing to seek more favorable approaches for conducting continuous lifelong learning and

integrating them into various applications. We have recently begun cooperating with NTU Hospital to identify

early and appropriate applications of smart emergency medicine, and are planning to collaborate with Microsoft

Newsgroup on re ning the editing process.

45

Discriminative Autoencoder-based Speaker/Speech Figure 6 : Discriminative Autoencoder-based Speaker/Speech

Recognition Recognition. Speaker-related information is extracted

from speaker-independent factors with di erent loss

Autoencoders are often used to efficiently encode in functions at the code layer, so that the speaker-related

unsupervised learning. We rst proposed a discriminative representation has better discriminating power and

autoencoder (DcAE) in 2017, which has been applied recognition accuracy is improved.

to speaker recognition. The basis of this research is to

separate speaker-related information from speaker-

independent factors by considering different loss

functions at the code layer, so that speaker-related

representations are better discriminated, thereby

improving recognition accuracy. In 2019, we modified

the structure of our DcAE and successfully applied it to

TDNN and TDNN-LSTM acoustic model architectures in

the nnet3 setup of the Kaldi speech recognition toolkit.

The corresponding recipe for the WSJ corpus was

released, and the results of this work were published in

Interspeech2019.

Variational Autoencoder-based Voice Conversion two kinds of spectral features, further improving the quality

of the converted speech and the similarity to the target

Voice conversion (VC) aims to convert the speech of a speaker. That advance was published in ISCSLP2018, and

source speaker to that of a target speaker without changing the paper won a Best Student Paper Award. In 2019, we

the linguistic content. In 2016, we rst applied a variational introduced the WaveNet vocoder into our VAE-based VC

autoencoder (VAE) to voice conversion under non-parallel system to replace the traditional WORLD vocoder. We also

training conditions. The resulting paper was published in proposed a method to remove fundamental frequency

APSIPA ASC 2016, which has been cited 102 times to date (F0) information at the code layer. A respective paper

(Google Scholar data). In 2017, we further integrated a integrating those research results has recently been

generative adversarial network (GAN) into our VAE-based accepted for publication by IEEE Trans. on ETCI.

VC system. That work was published in Interspeech2017

(cited 145 times, Google Scholar data). Then, in 2018, we

proposed a cross-domain VAE that simultaneously models

Figure 7 : Voice conversion (VC) Diagram. Our study focus on the modeling and learning of the spectral

feature encoder (Eθ) and decoder (GΦ).

Future Topics /

We are continuing to seek more favorable approaches for conducting continuous lifelong learning and

integrating them into various applications. We have recently begun cooperating with NTU Hospital to identify

early and appropriate applications of smart emergency medicine, and are planning to collaborate with Microsoft

Newsgroup on re ning the editing process.

45